How To Avoid False Negatives In Your Growth Experiments

This is a problem we see over and over again in the start-up world and beyond. A client believes they have tested an approach, or a marketing channel, and found it didn’t work. But once we look more closely at what they did, we find that they have prematurely called the results of the experiment and that, in actual fact, they need more data to make a decision.

The problem with doing this is that it can lead to poor strategic decision making. You may run a flawed experiment, come to flawed conclusions, and then make flawed commercial, strategic or tactical decisions on the back of it.

It’s good practice to run watertight, process-driven experiments in order to avoid false negatives and get a better understanding of your business, your product and your market. In this article we dive into what false negatives are, how they occur, and how you can avoid them in future.

What Is A False Negative?

A false negative is when you erroneously ‘rule out’ an idea, channel or other experiment.

Generally this happens when you have not gathered enough data, but what you have seen ‘appears’ to provide proof that the experiment has failed.

I’ll provide an example. A company I have worked with was running Google Ads, Facebook Ads and podcast sponsorship for their B2C product. A month after the campaign started, it looked like Google Search Ads were delivering a £16 cost per acquisition (CPA), Facebook Ads a £40 CPA and podcast sponsorship a £120 CPA.

The company was operating on a tight budget, and was brand new, so it didn’t have a clear idea of its customer lifetime value (LTV). They therefore didn’t know what they could or should be spending to acquire a customer, but wanted to save money where possible.

As a result, they decided that the first month had proven that Facebook Ads and podcast sponsorship were inferior channels to Google Search Ads for their product. The podcast sponsorship was under a three month contract, so they had to keep that running. They decided to stop the Facebook spend altogether and redirect it to Google Ads.

Within a week their Google Ads CPA shot up to £45 and kept rising.

This was because they had made a major channel spend decision based on a false negative. They soon reversed their decision and started spending on Facebook Ads again.

By the end of the three month podcast sponsorship contract, it was clear that all three channels were working together to provide an overall average customer acquisition cost (CAC) of £40, against an LTV of £110. Facebook and podcast sponsorship were filling the top of the funnel by raising awareness. Those users were then researching on Google, and eventually converting either by clicking brand pay per click (PPC) ads or clicking on generic terms having recognised the brand name from elsewhere.
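The arithmetic behind a blended figure like this can be sketched in a few lines of Python. This is a minimal illustration, not the client's actual reporting: the function names and the spend and customer numbers are hypothetical, chosen only so that the result lines up with the £40 CAC and £110 LTV quoted above.

```python
def blended_cac(spend_by_channel, new_customers):
    """Total spend across all channels divided by total new customers."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return sum(spend_by_channel.values()) / new_customers


def ltv_to_cac(ltv, cac):
    """How many pounds of lifetime value each pound of acquisition spend buys."""
    return ltv / cac


# Hypothetical monthly figures, for illustration only.
spend = {"google_search": 2000.0, "facebook": 1500.0, "podcast": 1500.0}

cac = blended_cac(spend, new_customers=125)  # 5000 / 125
ratio = ltv_to_cac(110.0, cac)

print(f"Blended CAC: £{cac:.2f}, LTV:CAC ratio: {ratio:.2f}")
```

Looking at the blended number alongside the per-channel CPAs is one way to spot when a channel that looks expensive on its own is actually feeding conversions elsewhere.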

How to Avoid False Negatives

This was an easy mistake to make, and all too common. Modern digital analytics tools can sometimes give you the impression that you can see, at any time, what channels are working best for you.

Sometimes this is the case. But you have to be very careful making decisions like this – perfect attribution is usually impossible to do, because people very rarely see one ad on one channel and click then and there to make a purchase. And some channels need more time than others to begin to move the needle.

Here are the main ways to avoid false negatives in your growth experiments:

1. Use Experts To Execute The Experiments

Almost anyone can set up a Facebook Ads or Google PPC campaign. But there are so many variables within the set-up that can affect how well the channel performs. Campaign structure, goal optimisation, audience selection, frequency, creative, copy – any one of these could be a point of failure that makes the channel underperform.

We’ve seen this countless times at Growth Division. A client will say to us that they have tried a particular channel, and that it didn’t work for them. But when we take a look at the way they had run the channel, we can immediately see that they’d shot themselves in the foot by not using appropriately skilled people to run the channel.

For a fair experiment, you need to give whatever you are testing the best chance to succeed. And that means ensuring the person implementing it really knows what they’re doing.

2. Use A Proper Growth Experiment Framework

A growth experiment framework is a set of rules and practices that you use to ensure consistent methodology in your experiments. Consistent, sound methodology is vital for fair, useful experiments.

Your growth experiment framework should ensure each experiment has clear intentions, measurables and parameters. Here are some of the steps you should include in your growth experiment framework:

- Define and measure your marketing funnel. This will show you where your weaknesses are, what you need to work on, and what you need to measure. Once you’ve done this you’ll have a better understanding of what areas of the customer journey need your attention and what experiments you should run.

- Define your objectives and key results (OKRs). This will ensure you have practical, measurable goals with clear milestones.

- Brainstorm ideas. Assemble your team and have a brainstorm to find ideas to hit your OKRs.

- Prioritise your experiments. Give each experiment an ICE score (potential impact, chance of success, effort required). Prioritise the experiments with the best score.

- Give each chosen experiment a proper structure – objective, hypothesis, measurables, timeframe, experiment owner.
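The prioritisation step can be sketched in Python. This is one possible way to score experiments, under assumptions: each factor is rated from 1 to 10, and effort counts against an experiment, so the score here is impact multiplied by chance of success and divided by effort. The class, the formula and the example backlog are all hypothetical; teams combine ICE ratings in different ways.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    impact: int  # potential impact, 1 (low) to 10 (high)
    chance: int  # chance of success, 1 (low) to 10 (high)
    effort: int  # effort required, 1 (easy) to 10 (hard)

    def ice_score(self) -> float:
        # Assumed formula: effort lowers the score, so divide by it.
        return self.impact * self.chance / self.effort


def prioritise(experiments):
    """Return experiments ordered from best ICE score to worst."""
    return sorted(experiments, key=lambda e: e.ice_score(), reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    Experiment("Podcast sponsorship test", impact=7, chance=4, effort=6),
    Experiment("Brand PPC campaign", impact=5, chance=8, effort=2),
    Experiment("LinkedIn thought-leadership posts", impact=6, chance=5, effort=3),
]

ranked = prioritise(backlog)
for experiment in ranked:
    print(f"{experiment.name}: {experiment.ice_score():.1f}")
```

The scores are only as good as the ratings behind them, so it helps to agree the ratings as a team rather than have one person assign them.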

We’ve written before in more detail about how to build your own growth framework. It’s important to do this if you’re going to avoid false negatives and get useful results.

3. Run The Experiments For Long Enough

This is really crucial. You have to run experiments for long enough to gather statistically significant data.

As in the example I mentioned at the start of this article, it can be tempting to jump to conclusions from limited data sets, especially when it’s your company’s money you’re spending on the experiment. If it looks like a lost cause, you’ll feel the pressure to can it quickly.

But this can be a huge error. Some channels need time to bed in (for example, Facebook and Google ads benefit from 2-3 months of activity to give the AI time to learn to optimise the ads, and give the channel owner time to refine their campaigns based on data).

And, depending on your sales cycle, you sometimes can’t tell whether a particular activity or experiment has been successful for months and months.

Unfortunately, there isn’t a ‘one size fits all’ approach for determining the lengths of your experiments. In general, we use 3 months as a benchmark – this works for testing most marketing channels. But some experiments need more time, and some need less. This is where having an experienced strategist at the helm can help – they’ll be able to use their experience and intuition to tell you how long an experiment should run for.
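As a rough illustration of what "statistically significant" means when you compare two conversion rates, the sketch below runs a standard two-proportion z-test using only Python's standard library. The click and conversion figures are hypothetical, and the 5% threshold is a common convention rather than a rule from this article. It covers simple A/B-style comparisons only, and says nothing about channels that support each other, as in the earlier example.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: the same 2% vs 1% conversion rates at two sample sizes.
_, p_early = two_proportion_z_test(8, 400, 4, 400)      # early in the test
_, p_late = two_proportion_z_test(80, 4000, 40, 4000)   # ten times more data

for label, p in (("early", p_early), ("late", p_late)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.4f} ({verdict} at the 5% level)")
```

With the smaller sample the difference could easily be noise. With ten times the data, the same rates clear the 5% threshold, which is why calling a result early is risky.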

4. Don’t Rely Too Much On Digital Attribution

This is quite an important one. Modern analytics is incredible and has transformed how marketing is measured. With the right analytics tools, UTM tracking and pixel implementation you can have a good idea of where any conversion has come from.

However – there is a caveat. When a user has interacted with multiple channels and assets of yours, it becomes very tricky to accurately attribute the conversion or sale.

Consider this example customer journey: a user sees a LinkedIn post of yours, clicks through to read a blog, forgets about you for a few weeks, hears a friend mention your company, googles you and clicks a search ad before converting.

By default, Google will claim that as a brand PPC conversion. You can adjust the attribution model in analytics, and it will split the conversion value across different channels/touchpoints that the user has interacted with. Points based attribution can go some way to ensuring different channels get some of the credit for the conversion. But it can’t go all the way, and it can usually only track what a user does if they do it on the same browser within a limited period of time.
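To make the difference between attribution models concrete, here is a minimal Python sketch that splits one conversion across the trackable touchpoints in the journey above. The function names, the £100 conversion value and the 40/20/40 position-based weights are assumptions for illustration. Analytics tools implement their own versions of these models.

```python
def last_click(touchpoints, value):
    """Give all of the conversion value to the final tracked touchpoint."""
    if not touchpoints:
        raise ValueError("need at least one touchpoint")
    credit = {t: 0.0 for t in touchpoints}
    credit[touchpoints[-1]] += value
    return credit


def position_based(touchpoints, value, first=0.4, last=0.4):
    """Weight the first and last touchpoints, share the rest in between."""
    if not touchpoints:
        raise ValueError("need at least one touchpoint")
    n = len(touchpoints)
    if n == 1:
        weights = [1.0]
    elif n == 2:
        total = first + last
        weights = [first / total, last / total]
    else:
        middle = (1.0 - first - last) / (n - 2)
        weights = [first] + [middle] * (n - 2) + [last]
    credit = {}
    for touchpoint, weight in zip(touchpoints, weights):
        credit[touchpoint] = credit.get(touchpoint, 0.0) + value * weight
    return credit


# The friend's recommendation is missing: no tracking tool can see it.
journey = ["LinkedIn post", "Blog article", "Brand search ad"]

print("Last click:    ", last_click(journey, 100.0))
print("Position based:", position_based(journey, 100.0))
```

Under last click, the search ad takes all the credit. The position-based split gives LinkedIn and the blog a share, but neither model can credit the word-of-mouth step, which is where judgement comes in.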

Plus, this is all getting harder – the Apple iOS update, among other changes, is making third-party pixel tracking harder, and forcing attribution windows to shrink from 28 days to just 7 in many cases.

Attribution, therefore, requires judgement as well as analytics. You’ve got to be able to know when to believe what the numbers tell you and when to use your own instinct and experience.

How We Run Experiments Properly

At Growth Division, we have run countless experiments for our clients, all the way from channel level (validating whether a channel works), to campaign structure level (validating whether a particular tactical approach works), to creative level (validating whether a particular messaging, copy or creative approach works).

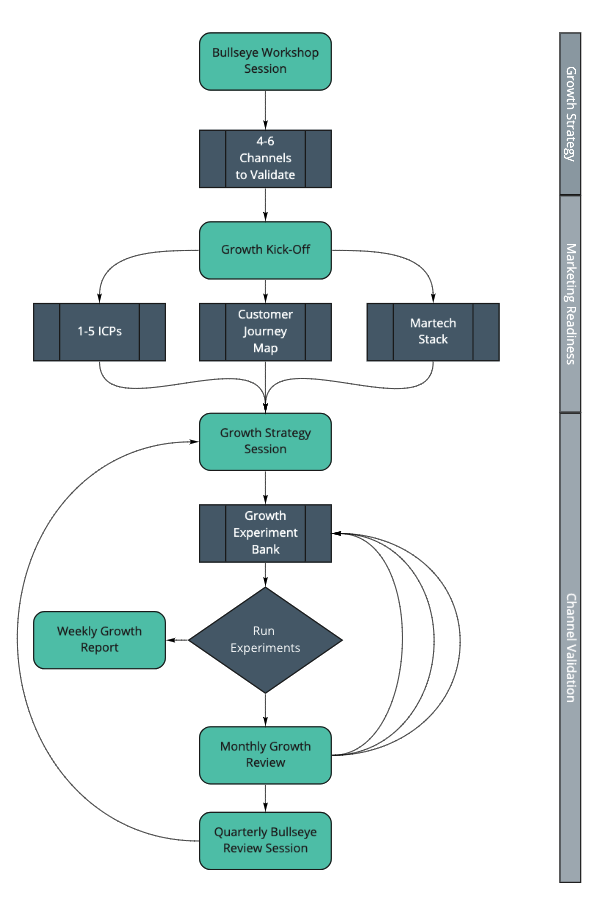

We have formalised this process in our own growth framework, which has three parts:

Part 1: Growth Strategy

During this phase we hold a ‘bullseye’ session with the client to determine what marketing channels we’re going to test and validate. We go through each one of the channels in the bullseye framework and generally decide on 4-6 channels to test over a 3 month period.

Part 2: Marketing Readiness

During this phase we work with the client to prepare 1-5 Ideal Customer Personas. For each of these personas we create a demographic and psychographic profile, upon which we’ll base our messaging ideas. We lead this process, but it is collaborative – the client obviously knows their customer and sector best and their insight is invaluable.

We then create a customer journey map, which outlines all the steps a customer typically takes along the marketing funnel. Finally, we propose and build out a martech stack to ensure the client has all the tools they need to be successful.

Part 3: Channel Validation

We now set up specific tactical experiments in each of the channels we are validating and add them to our growth experiment bank. These experiments are run, with reporting to the client done both weekly and monthly.

As each experiment is completed, we’ll close it off and create a new one. This process of constant experimentation and iterative improvement ensures we never have a situation where a channel is just set up and left to run. We’re actively testing and tweaking all the time to get the most out of each channel.

At the end of each quarter we re-run the bullseye session and decide which channels have worked, which haven’t, and which we’re going to test next.
